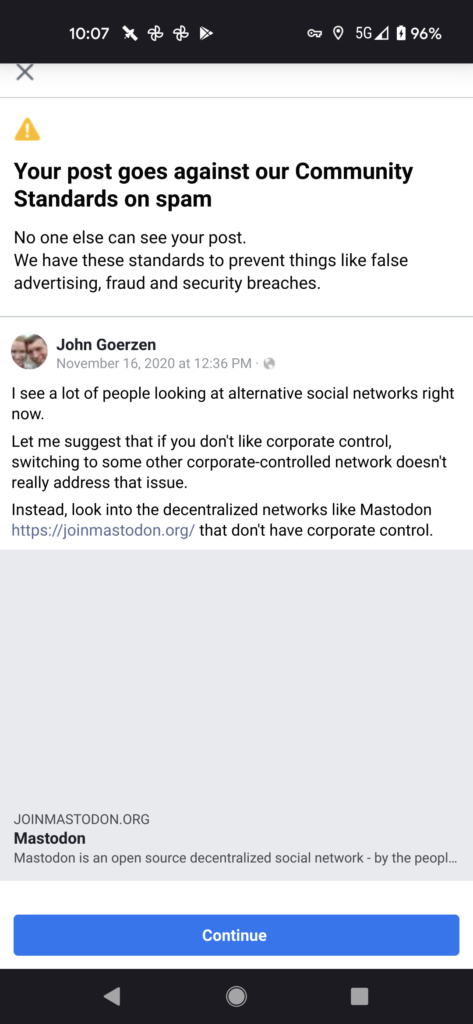

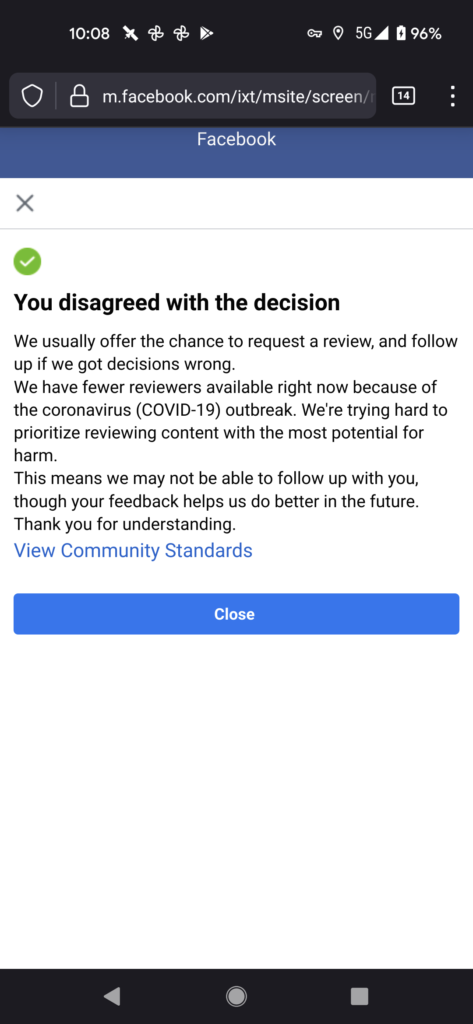

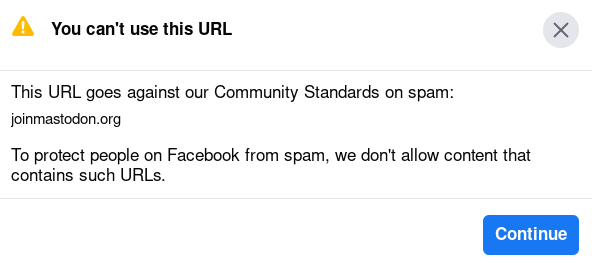

In the aftermath of my report of Facebook censoring mentions of the open-source social network Mastodon, there was a lot of conversation about whether or not this was deliberate.

That conversation seemed to focus on whether a human specifically added joinmastodon.org to some sort of blacklist. But that’s not even relevant.

OF COURSE it was deliberate, because of how Facebook tunes its algorithm.

Facebook’s algorithm is tuned for Facebook’s profit. That means it’s tuned to maximize the time people spend on the site — engagement. In other words, it is tuned to keep your attention on Facebook.

Why do you think there is so much junk on Facebook? So much anti-vax, anti-science, conspiracy nonsense from the likes of Breitbart? It’s not because their algorithm is incapable of surfacing the good content; we already know it can, because they temporarily pivoted it shortly after the last US election. They intentionally undid those efforts to make high-quality news sources more prominent, twice.

Facebook has said that certain anti-vax disinformation posts violate its policies. It has an extremely cumbersome way to report them, but it can be done and I have. These reports are met with either silence or a response claiming the content didn’t violate their guidelines.

So what algorithm is it that allows Breitbart not just to be seen but to thrive on the platform, and lets anti-vax disinformation survive even a human review, while banning mentions of Mastodon?

One that is working exactly as intended.

We may think this algorithm is busted. Clearly, Facebook does not. If their goal is to maximize profit by maximizing engagement, the algorithm is working exactly as designed.

I don’t know if joinmastodon.org was specifically blacklisted by a human. Nor is it relevant.

Facebook’s choice to tolerate and promote the things that serve its greed for engagement and money, even if they are the lowest dregs of the web, is deliberate. It is no accident that Breitbart does better than Mastodon on Facebook. After all, which of these does its algorithm detect as keeping people more engaged on Facebook itself?

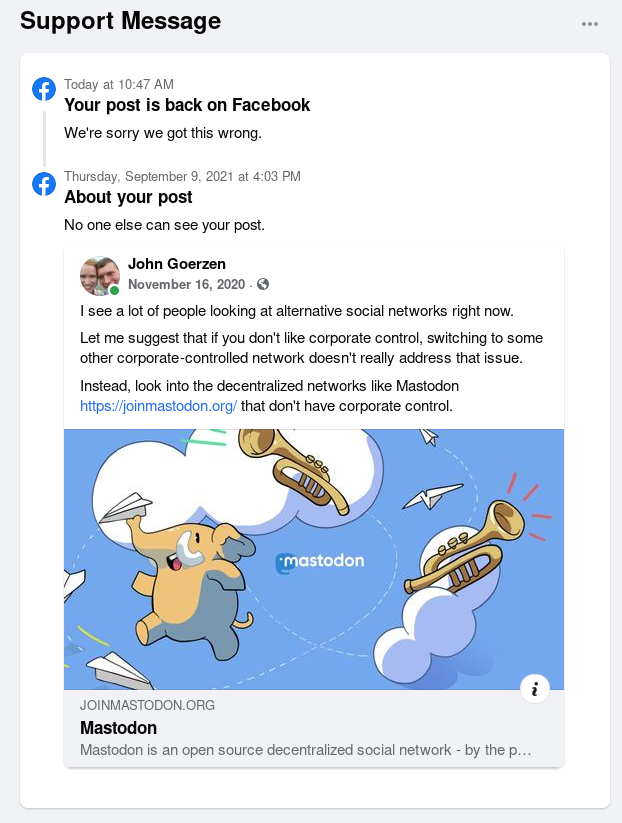

Facebook removes the ban

You can see all the screenshots of the censorship in my original post. Now, Facebook has reversed course:

We also don’t know if this reversal was human or algorithmic, but that is still beside the point.

The point is, Facebook intentionally chooses to surface and promote those things that drive engagement, regardless of quality.

Clearly many have wondered if tens of thousands of people have died unnecessary deaths over COVID as a result. One whistleblower says “I have blood on my hands” and President Biden said “they’re killing people” before “walking back his comments slightly”. I’m not equipped to verify those statements. But what do they think is going to happen if they prioritize engagement over quality? Rainbows and happiness?

Update 2024-04-06: It’s happened again. Facebook censored my post about the Marion County Record because the same site was critical of Facebook censoring environmental stories.