In the aftermath of my report of Facebook censoring mentions of the open-source social network Mastodon, there was a lot of conversation about whether or not this was deliberate.

That conversation seemed to focus on whether a human specifically added joinmastodon.org to some sort of blacklist. But that’s not even relevant.

OF COURSE it was deliberate, because of how Facebook tunes its algorithm.

Facebook’s algorithm is tuned for Facebook’s profit. That means it’s tuned to maximize the time people spend on the site — engagement. In other words, it is tuned to keep your attention on Facebook.

Why do you think there is so much junk on Facebook? So much anti-vax, anti-science, conspiracy nonsense from the likes of Breitbart? It’s not because their algorithm is incapable of surfacing the good content; we already know it can because they temporarily pivoted it shortly after the last US election. They intentionally undid its efforts to make high-quality news sources more prominent — twice.

Facebook has said that certain anti-vax disinformation posts violate its policies. It has an extremely cumbersome way to report them, but it can be done and I have. These reports are met with either silence or a response claiming the content didn’t violate their guidelines.

So what algorithm is it that allows Breitbart to not just be seen but to thrive on the platform, lets anti-vax disinformation survive even a human review, while banning mentions of Mastodon?

One that is working exactly as intended.

We may think this algorithm is busted. Clearly, Facebook does not. If their goal is to maximize profit by maximizing engagement, the algorithm is working exactly as designed.

I don’t know if joinmastodon.org was specifically blacklisted by a human. Nor is it relevant.

Facebook’s choice to tolerate and promote the things that serve its greed for engagement and money, even if they are the lowest dregs of the web, is deliberate. It is no accident that Breitbart does better than Mastodon on Facebook. After all, which of these does its algorithm detect as keeping people more engaged on Facebook itself?

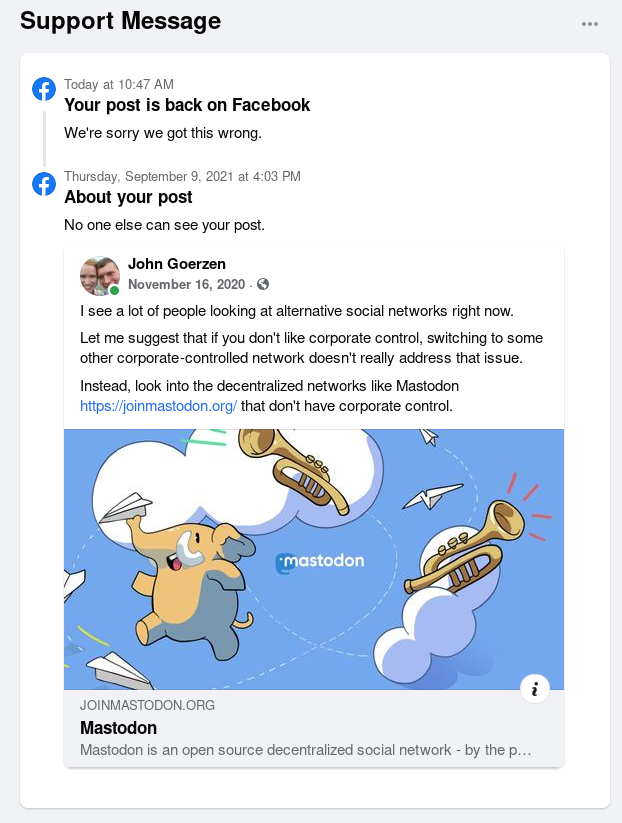

Facebook removes the ban

You can see all the screenshots of the censorship in my original post. Now, Facebook has reversed course:

We also don’t know if this reversal was human or algorithmic, but that still is beside the point.

The point is, Facebook intentionally chooses to surface and promote those things that drive engagement, regardless of quality.

Clearly many have wondered if tens of thousands of people have died unnecessary deaths over COVID as a result. One whistleblower says “I have blood on my hands” and President Biden said “they’re killing people” before “walking back his comments slightly”. I’m not equipped to verify those statements. But what do they think is going to happen if they prioritize engagement over quality? Rainbows and happiness?

Update 2024-04-06: It’s happened again. Facebook censored my post about the Marion County Record because the same site was critical of Facebook censoring environmental stories.

Update: Facebook has reversed itself over this censorship, but I maintain that whether the censorship was algorithmic or human, it was intentional either way. Details in my new post.

Last November, I made a brief post to Facebook about Mastodon. Mastodon is an open-source and open social network, which is decentralized and all about user control instead of corporate control. I’ve blogged about Mastodon and the dangers of Facebook before, but rarely mentioned Mastodon on Facebook itself.

Today, I received this notice that Facebook had censored my post about Mastodon:

Wonder with me for a second what this one-off post I composed myself might have done to trip Facebook’s filter... It is probably obvious that what tripped the filter was the mention of an open-source competitor, even though Facebook is vastly larger than Mastodon. I have been a member of Facebook for many years, and this is the one and only time anything like this has happened.

Why they decided today to take down that post – I have no idea.

In case you wondered about their sincerity towards stamping out misinformation — which, on the rare occasions they do something about, they “deprioritize” rather than remove as they did here — this probably answers your question. Or, are they sincere about thinking they’re such a force for good by “connecting the world’s people?” Well, only so long as the world’s people don’t say nice things about alternatives to Facebook, I guess.

“Well,” you might be wondering, “Why not appeal, since they obviously made a mistake?” Because, of course, you can’t:

Indeed I did tick a box that said I disagreed, but there was no place to ask why or to question their action.

So what would cause a non-controversial post, from a long-time Facebook member who has never had anything like this happen, to disappear?

Greed. Also fear.

Maybe I’d feel sorry for them if they weren’t acting like a bully.

Edit: There are reports from several others on Mastodon of the same happening this week. I am trying to gather more information. It sounds like it may be happening on Twitter as well.

Edit 2: And here are some other reports from both Facebook and Twitter. Definitely not just me.

Edit 3: While trying to reply to someone on Facebook who was trying to defend Facebook, I mentioned joinmastodon.org and got this:

Anyone else seeing it?

Edit 4: It is far more than just me, clearly. More reports are out there; for instance, this one and that one.

Facebook is an information warfare platform dressed up as “a bit of harmless fun” and “a convenient way of keeping in touch with friends and family”. People get upset when this is pointed out (“I don’t see any of that bad stuff, my family loves it”), but maybe they should really be upset that Facebook was, for example, quite happy to let advertisers target “people interested in ‘vaccine controversies'”:

https://www.theguardian.com/technology/2019/feb/15/facebook-anti-vaccination-advertising-targeting-controversy

And if you read that article, you’ll find worse than that in there.

Of course, back in 2019, information warfare via Facebook had already caused deaths from preventable diseases, but I guess a bunch of rich kids in the Valley didn’t care because all the victims were thousands of miles away in some developing country, and I guess that a bit of performative philanthropy in front of a compliant, fawning media will always clear up today’s public relations crisis.

But malign things have a habit of getting out of control and becoming even more malevolent. In a global pandemic we might expect some reflection as those distant problems turn up rather closer to home. Instead, we still get disingenuous fake intellectualism about “freedom of speech” on proprietary platforms that are neither obliged to uphold such freedoms nor responsible for the content their platforms expose to their users, all while the money pours in to actively target those users, facilitated by the platform and its architects.

How did it go? The only way to win is not to play? Taking down the mainframe would work, too.

@jgoerzen fascinating – I shared your post on FB (as part of a thread in which I called out their blocking of joinmastodon.org, which I’d also experienced first hand) to say that apparently FB had reconsidered their blockage… and right after (successfully) posting it… I got a notification that my *original* post with joinmastodon.org had been *re-blocked* for reasons that are not clear… Crazy.

@lightweight @jgoerzen This story keeps getting better.

@lightweight Can you share screenshots, perchance?

@jgoerzen Money needs to make #money.

@lightweight @jgoerzen Would be good evidence if the DOJ ever initiated an antitrust investigation.

@jgoerzen Like this promo! I am using it for the first time, so am eager to learn more about it.

I recommended some try AntiX Linux on Facebook and was told I violated their policies by doing so.

Other platforms have done it too, then reversed their decision:

2021: “Facebook Is Censoring People For Mentioning Open-Source Social Network Mastodon”

changelog.complete.org/archives/10285…

changelog.complete.org/archives/10303…

Why did Facebook do it?

It is, sadly, not entirely surprising that Facebook is censoring articles critical of Meta. The Kansas Reflector published an article about Meta censoring environmental articles…